This blog post is the first in a series of articles on artificial intelligence. Contrary to the predictions of various “experts” in the past, AI has not disappeared; instead, it is becoming more significant in everyday life, particularly through AI chatbots. ChatGPT is perhaps the most widely used example.

Most users probably use an online version, either free or subscription-based. However, it is also possible to download a Large Language Model (LLM) and, with an application that provides an input interface, use it locally.

What is Ollama?

There are now tools that simplify downloading, using, and managing local AI models. One such tool is the free program Ollama. Originally, it was a command-line program, meaning it could only be run in the Terminal. However, a graphical user interface is now also available.

The Terminal app is located in the “Utilities” folder, a subfolder of the Applications folder.

> Applications > Utilities

Like any other program, the Terminal app can also be launched via Spotlight Search. Click the magnifying glass icon in the menu bar or press command + spacebar to open Spotlight Search. In the search field that appears, type “Terminal” and press Return to start the application. (If you want to learn more about the Terminal and the usage of the shell in macOS, I recommend the book macOS Shell for Beginners.)

Installing Ollama

Before we can use Ollama in the Terminal, the program must be downloaded. Visit the Ollama project website and click on “Download”. (Ollama is available for macOS, Linux, and Windows. This blog post covers its usage on a Mac. The usage on a Linux or Windows system is very similar.)

After downloading Ollama, install it in the usual way for macOS applications, i.e., copy the program into the Applications folder.
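To confirm that the command-line tool is reachable from the Terminal after the installation, a quick check like the following can help. This is only a minimal sketch: the function name check_ollama is made up for this example, and it simply looks for the ollama command and prints its version.

```shell
# check_ollama: report whether the ollama command can be found.
# (check_ollama is a hypothetical helper name, not part of Ollama.)
check_ollama() {
    if command -v ollama >/dev/null 2>&1; then
        # --version prints the installed Ollama version
        ollama --version
    else
        echo "ollama not found on PATH"
    fi
}

check_ollama
```

If the command is not found, opening a new Terminal window after the installation is a reasonable first thing to try.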

Downloading an LLM with Ollama

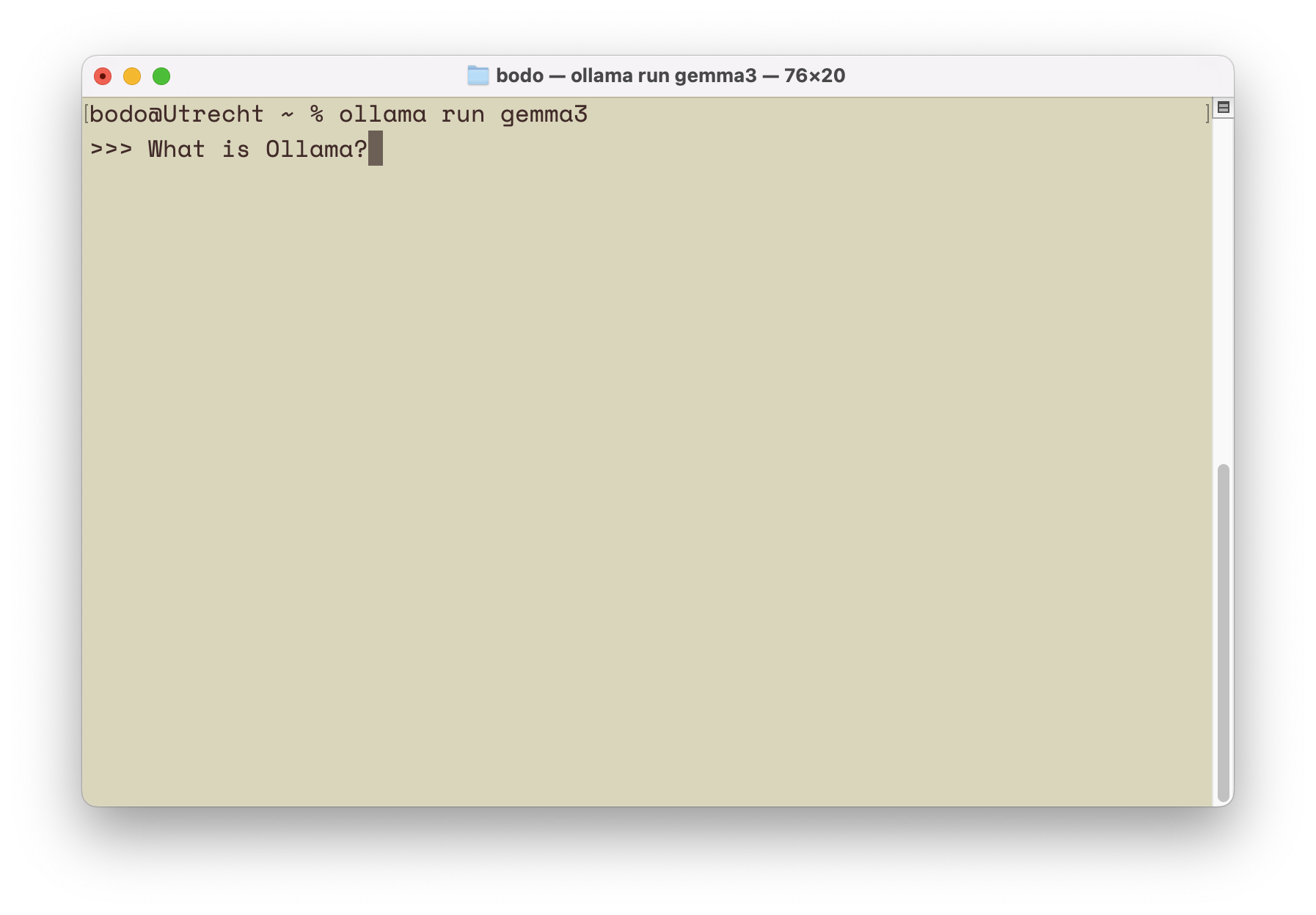

To use Ollama, start the Terminal (I will discuss the chat window further below). By simply entering the command ollama you will get an overview of the available options. I won’t discuss these in detail but will instead show how to download a Large Language Model. In this example, I will use Google’s Gemma3 model. The syntax is:

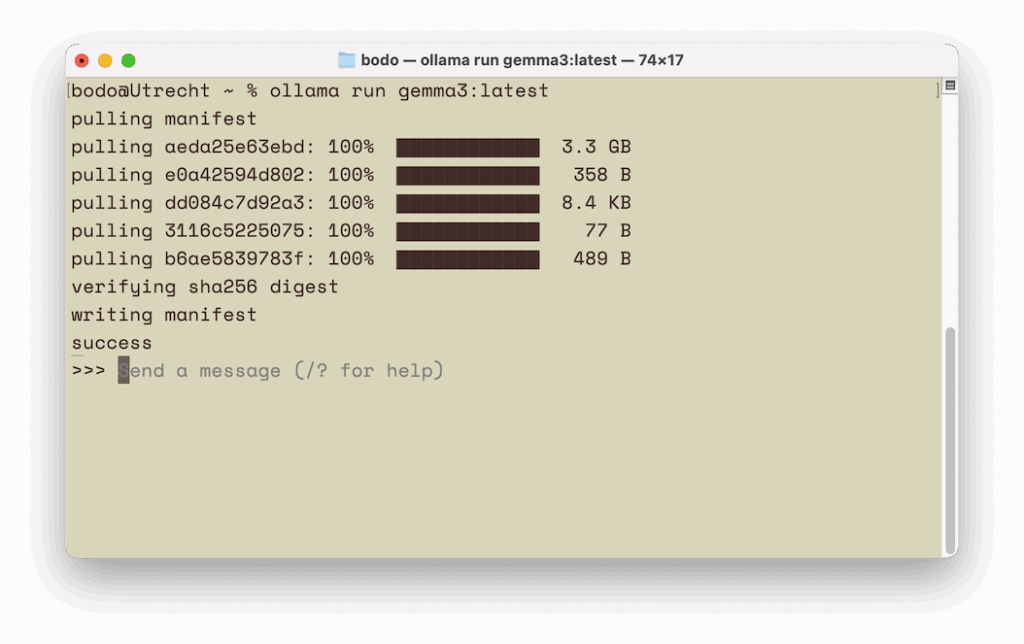

ollama run <AI Model>

To download the latest version of Gemma3, use the following command:

ollama run gemma3:latest

The “:latest” tag can be omitted, since Ollama falls back to it when no tag is given. Therefore, the following input would suffice:

ollama run gemma3

Running the AI Model

Executing the command checks if the AI model is already available locally. If not, it will be downloaded.
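If you only want to download models without starting a chat right away, the pull subcommand (it appears in the overview printed by the bare ollama command) does just that. The following is a small sketch; the helper name download_models is my own invention, and the model names in the usage comment are the ones used in this post.

```shell
# download_models NAME...: download each given model without starting a chat.
# (download_models is a hypothetical helper name for this example.)
download_models() {
    for model in "$@"; do
        echo "Downloading $model ..."
        # Stop at the first failed download
        ollama pull "$model" || return 1
    done
}

# Example call (this can transfer several gigabytes):
# download_models gemma3 mistral
```

The models can then be started later with ollama run, without having to wait for the download.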

Once the download is finished (or if the model is already on the computer), you can enter text after >>>. The response will be displayed in the Terminal. You can exit the command prompt by pressing control + d or by entering /bye.
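Besides the interactive session, ollama run also accepts the prompt as an additional argument. In that case the answer is printed and the command returns to the shell, which is handy for quick questions or scripts. A minimal sketch, with ask being a helper name I made up:

```shell
# ask MODEL PROMPT: send a single prompt and print the answer,
# without entering the interactive >>> session.
# (ask is a hypothetical helper name for this example.)
ask() {
    ollama run "$1" "$2"
}

# Example call (downloads the model first if it is missing):
# ask gemma3 "Explain in one sentence what a Large Language Model is."
```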

Similarly, you can also download or run a different AI model. For example, to use the AI model from Mistral, enter the following command:

ollama run mistral

To check which AI models are already installed locally, use the following command:

ollama list

Which AI Models Are Available?

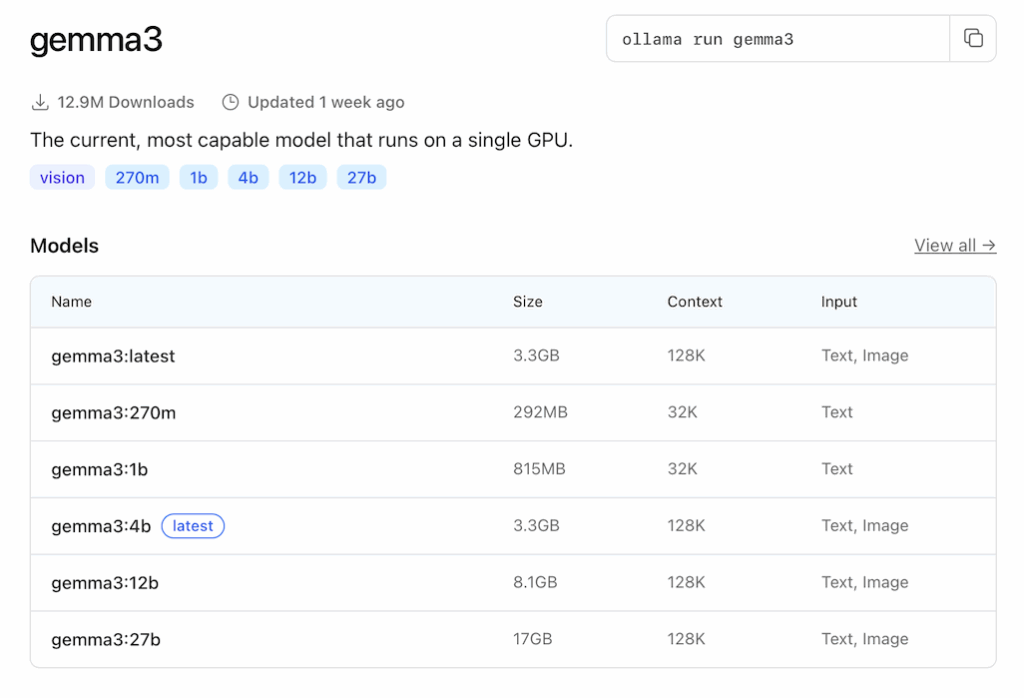

You might not know all the names of the downloadable AI language models. A look at the Ollama homepage can help. There, you can find a list of all available AI language models. Clicking on the name of a language model provides more information, including which designation to use if you don’t want the latest version of a model.
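A designation consists of the model name and a tag, separated by a colon, for example gemma3:4b (I am using the 4b tag as an example here; the model page lists the tags that actually exist). The sketch below shows how a name without a tag maps to “:latest”, and how to test whether a particular designation is already installed. Both function names are made up for this post, and has_model assumes that ollama list prints one header line followed by one model per row with the designation in the first column.

```shell
# with_tag NAME: append ":latest" when NAME carries no explicit tag.
# (with_tag and has_model are hypothetical helper names.)
with_tag() {
    case "$1" in
        *:*) echo "$1" ;;
        *)   echo "$1:latest" ;;
    esac
}

# has_model NAME: succeed if NAME appears in the first column of `ollama list`.
# Assumption: the first output line is a header and is therefore skipped.
has_model() {
    wanted=$(with_tag "$1")
    ollama list | awk -v m="$wanted" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

# Example calls:
# ollama run gemma3:4b
# if has_model gemma3:4b; then echo "installed"; else echo "not installed"; fi
```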

The Graphical User Interface

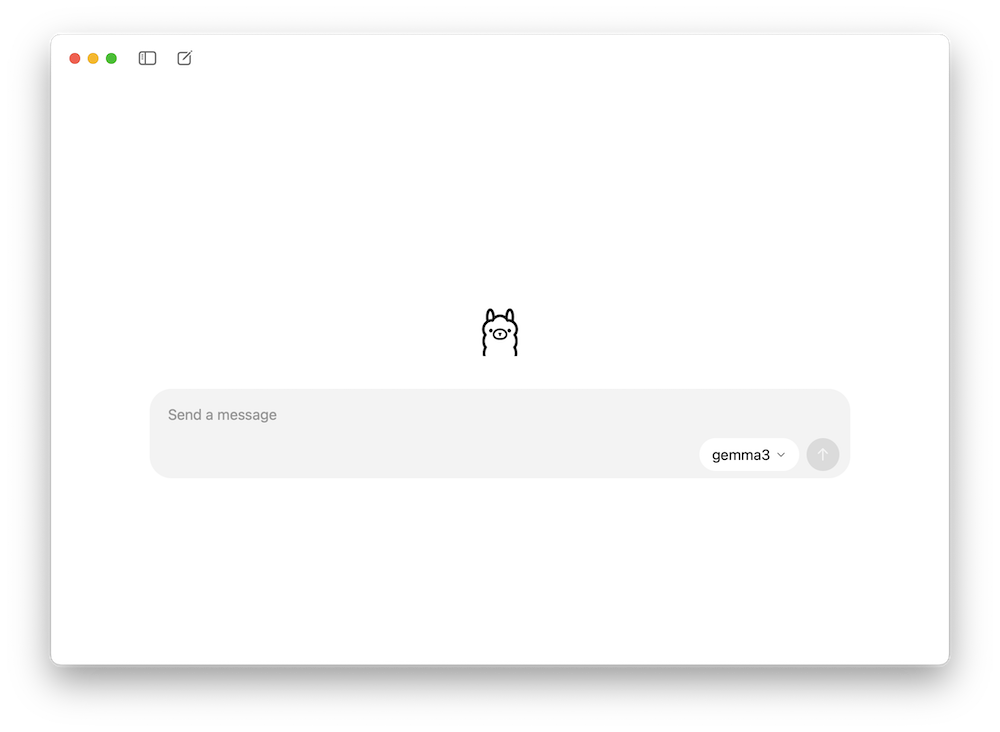

If you don’t want to deal with the Terminal, you can use a chat window very similar to ChatGPT, albeit with less functionality.

To start this window, click on the Ollama icon in the menu bar and select “Open Ollama”. In the input field, you can enter text and select a model. If the selected model is not available locally, it will be downloaded.

What Kind of Mac is Needed?

In principle, any Mac with an Apple Silicon chip (e.g., M1, M2, M3, etc.) can be used. However, your Mac should have at least 16 GB of RAM; otherwise, using a local AI language model might not be enjoyable. For very large models, a more recent Pro model (e.g., M4 Pro) with a significant amount of RAM is necessary.
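If you are not sure what your Mac has to offer, the Terminal can tell you. The following sketch prints the processor architecture and the installed memory and compares it with the 16 GB mentioned above. It relies on the macOS sysctl key hw.memsize, so the check is skipped on other systems; bytes_to_gib is a helper name I made up.

```shell
# bytes_to_gib BYTES: convert a byte count to whole GiB (integer division).
# (bytes_to_gib is a hypothetical helper name for this example.)
bytes_to_gib() {
    echo $(( $1 / 1024 / 1024 / 1024 ))
}

# hw.memsize is a macOS sysctl key, so only run the check on macOS.
if [ "$(uname -s)" = "Darwin" ]; then
    # arm64 usually indicates an Apple Silicon chip
    echo "Architecture: $(uname -m)"
    ram_gib=$(bytes_to_gib "$(sysctl -n hw.memsize)")
    echo "Installed RAM: ${ram_gib} GiB"
    if [ "$ram_gib" -lt 16 ]; then
        echo "This is less than the 16 GB recommended in this post."
    fi
fi
```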

Conclusion

If you want to experiment with local AI language models, Ollama is a good place to start. If you’re not familiar with the command line (the Terminal app), it might seem a bit daunting at first. However, this hurdle should be easy to overcome, especially since you only need a few commands for using and managing local AI models. If you prefer not to deal with the Terminal, a graphical user interface is now available, though it is quite minimalist.